Category Archives: Blog

Monitoring Job Queues: Setting Up Failure Notifications using Power Automate

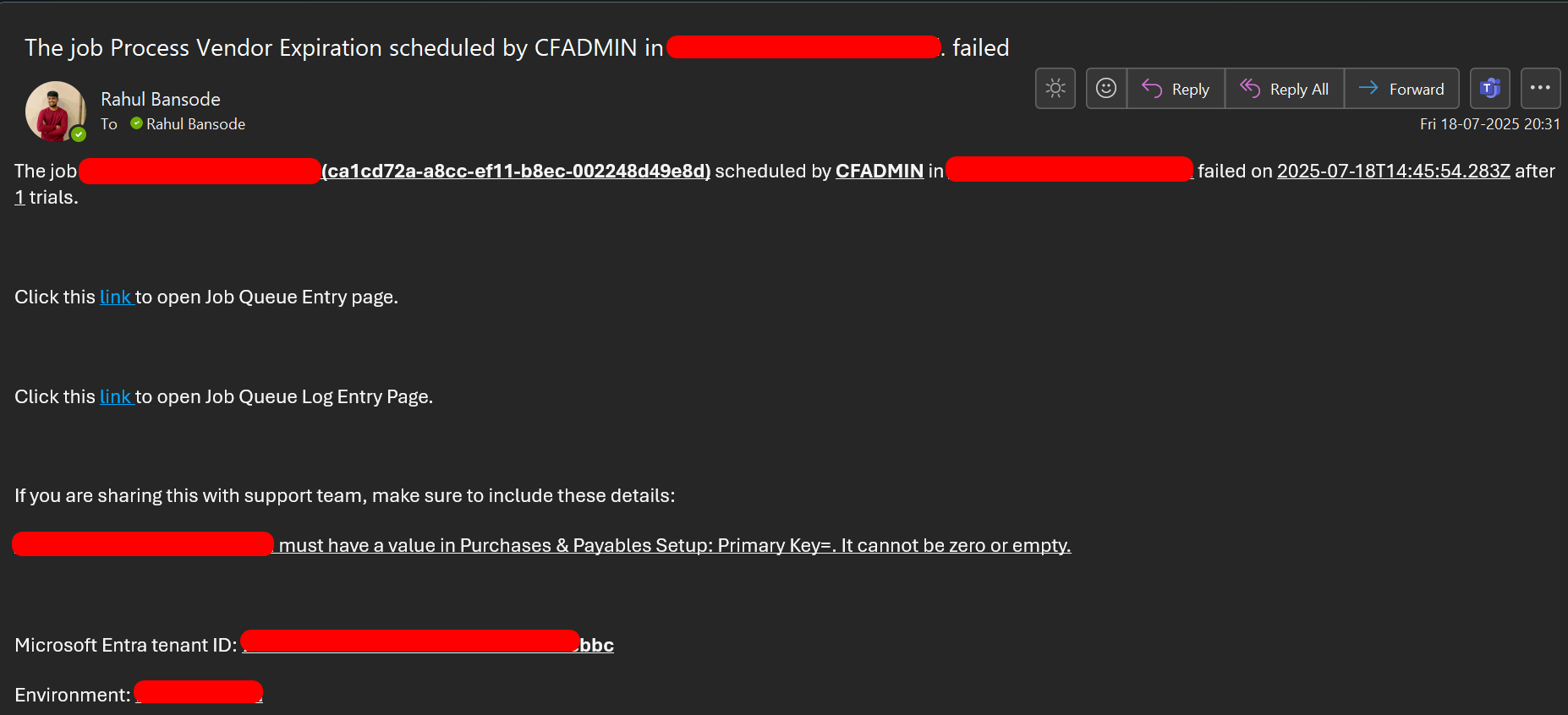

A job queue lets users set up and manage background tasks that run automatically, typically on a recurring schedule.

For a long time, Business Central users had a common problem: when a job queue failed, there was no alert or warning. You’d only notice something was wrong when a regular task hadn’t run for a few days. Some people worked around this by setting up another job queue to watch for and restart failed ones, but that didn’t always work, especially if an update happened at the same time.

Now Microsoft has added a built-in way to get alerts when a job queue fails. You can be notified either inside Business Central or through Business Events. In this blog, we’ll walk through using Business Events to set up notifications on job queue statuses.

Configuration

1. Search for “Assisted Setup” in Business Central’s global search.
2. Scroll down to “Set up Job Queue notifications” and click Next.
3. Add any additional users who need to be notified when the job queue fails, along with the job creator (if required).
4. Choose whether the notification should be in-product or sent using Business Events (and Power Automate). I’m choosing Business Events this time, then Next.
5. Click Finish.
6. Search for Job Queue Entries and, from that list page, open the Job Queue Entry card.
7. Click on Power Automate. If you are using Power Automate for the first time, it will ask for your consent. As an Administrator, you can give or revoke consent for all users at once via the Privacy Notice Statuses page.
8. Go back to the Job Queue Entry card and click on Power Automate again. This time, you’ll get the option to create an automated flow.
9. In the pop-up screen, pick the template for a job queue entry failure notification flow.
10. Once you click on it, you’ll be asked to sign in to Business Central as well as the Outlook account that will be used to send the emails (if different from the current user).
11. On the next screen, add any additional users who need to be copied on the notifications.
12. Click on “Create Flow” and you are done!

Ideally, the setup should have worked at this point, but it didn’t. After some digging, I found that the Power Automate flow was missing some key pieces. One of the actions didn’t have the environment configured, and another action (GetUrlV3) isn’t even available in the current (v25) version of Business Central. I came across two forum threads (1) (2) about this issue, but they had no clear solution.

So, as a workaround, I created a Web Service based on the Job Queue Entries Log page and used the GetRecord action in Power Automate to fetch the required data. It wasn’t too hard for me since I knew what to look for, but for a new user this would have been very confusing.

I also noticed something odd: the action that picks the email address of the person to notify pulls it from the Contact Email field on the User Card instead of from User Setup, which would have made more sense.

Anyway, after all that, here’s what the final solution looks like!

To conclude, setting up job queue failure alerts with Business Events is a helpful new feature in Business Central. It lets you know when background tasks fail, so you don’t have to keep checking them manually. But as we saw, the setup doesn’t always work out of the box. Some parts were missing, and a few things didn’t make sense, like where it pulls the email address from. If you’re familiar with Power Automate, you can fix these issues with a few extra steps. For someone new, though, it might be a bit confusing. Hopefully, Microsoft will improve the setup in future updates. Until then, this blog should help you get the alerts working without too much trouble.
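To make the workaround described above a little more concrete (a Web Service published on the Job Queue Entries Log page and read with GetRecord), here is a minimal sketch of the kind of OData URL such a web service is typically reached at. The tenant ID, environment name, company name, service name `JobQueueLogEntries`, and the `Entry_No` filter field are all assumptions for illustration; use whatever names you chose when publishing the web service.

```python
from urllib.parse import quote

# Public endpoint for Business Central online (SaaS) tenants.
BASE = "https://api.businesscentral.dynamics.com/v2.0"


def job_queue_log_url(tenant_id, environment, company, service_name, entry_no=None):
    """Build the OData V4 URL of a published page web service.

    service_name is the name given on the Web Services page (hypothetical here).
    entry_no optionally narrows the result to one log entry; the field name
    Entry_No is an assumption about how the page field is exposed.
    """
    # Single quotes inside an OData string literal are escaped by doubling them.
    company_part = quote(company.replace("'", "''"), safe="")
    url = (
        f"{BASE}/{tenant_id}/{quote(environment, safe='')}"
        f"/ODataV4/Company('{company_part}')/{service_name}"
    )
    if entry_no is not None:
        url += "?$filter=" + quote(f"Entry_No eq {int(entry_no)}")
    return url


example = job_queue_log_url(
    tenant_id="contoso-tenant",      # hypothetical tenant
    environment="Production",
    company="CRONUS USA, Inc.",
    service_name="JobQueueLogEntries",
    entry_no=42,
)
print(example)
```

In Power Automate the connector hides this URL behind the GetRecord action, but seeing it spelled out can help when checking that the web service is published and returns the expected record.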
If you need further assistance or have specific questions about your ERP setup, feel free to reach out for personalized guidance. We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.


Project Contract Types in D365: Fixed Price vs Time & Material vs Milestone

When you run a project-based business, such as construction, IT, consulting, or engineering, how you charge your customers matters just as much as what you deliver. If you’re using Dynamics 365 Project Operations, you’ll need to decide how to bill your projects. Microsoft gives you three main contract types: Fixed Price, Time & Material, and Milestone.

Let’s break down what each of these means, when to use them, and how Dynamics 365 helps manage them.

1. Fixed Price – One Total Amount

What is it? The customer pays a fixed amount for the full project or part of it, no matter how many hours or resources you actually use.

When to use:

What Dynamics 365 helps you do:

Be careful:

Think of this like constructing a house for a fixed price. You get paid in stages, not by the number of hours worked.

2. Time & Material – Pay as You Go

What is it? The customer pays based on the hours your team works and the cost of materials used.

When to use:

What Dynamics 365 helps you do:

Be careful:

This is like a taxi ride: you pay based on how far you go and how long it takes.

3. Milestone Billing – Pay for Key Deliverables

What is it? You agree on certain key points (milestones) in the project. When those are completed, the customer is billed.

When to use:

What Dynamics 365 helps you do:

Be careful:

It’s like paying an architect after each part of a building design is done, not for every hour they work.

To conclude, choosing the right contract type helps you:

When your billing matches your work style, profits become more predictable and projects run more smoothly.

Need Help Deciding?

If you’re not sure which billing model is best for your business, or how to set it up in Dynamics 365 Project Operations, we’re here to help. Feel free to reach out to us at transform@cloudfronts.com. Let’s find the right setup for your success.


Essential Power BI Tools for Power BI Projects

For growing businesses, Power BI solutions are critical, but development efficiency is equally important, and it has to come without breaking the budget. As organizations scale their BI implementations, the need for advanced, free development tools increases, making smart tool selection essential to maintaining a competitive advantage.

Tool #1: DAX Studio – Your Free DAX Development Powerhouse

What Makes DAX Studio Essential

DAX Studio is one of the most critical free tools in any Power BI developer’s arsenal. It provides advanced DAX development and performance analysis capabilities that Power BI Desktop simply cannot match.

Scenarios & Use Cases

For a global oil & gas solutions provider with a presence in six countries, we used DAX Studio to analyze model size, reduce memory consumption, and optimize large datasets, preventing refresh failures in the Power BI Service.

Tool #2: Tabular Editor 2 (Community Edition) – Free Model Management

Tabular Editor 2 Community Edition provides model development capabilities that would cost thousands of dollars on other platforms, completely free.

Key Use Cases

For one of Europe’s largest laboratory equipment manufacturers, we used Tabular Editor daily to efficiently manage measures, hide unused columns, standardize naming conventions, and apply best-practice model improvements across large datasets. This avoided repetitive manual work in Power BI Desktop.

Tool #3: Power BI Helper (Community Edition) – Free Quality Analysis

Power BI Helper Community Edition provides professional model analysis and documentation features that rival expensive enterprise tools.

Key Use Cases

For a Europe-based laboratory equipment manufacturer, we used Power BI Helper to scan reports and datasets for common issues, such as unused visuals, inactive relationships, missing descriptions, and inconsistent naming conventions, before promoting solutions to UAT and Production.

Tool #4: Measure Killer

Measure Killer is a specialized tool designed to analyze Power BI models and identify unused or redundant DAX measures, helping improve model performance and maintainability.

Key Use Cases

For a technology consulting and cybersecurity services firm based in Houston, Texas (USA), specializing in modern digital transformation and enterprise security solutions, we used Measure Killer across Power BI engagements to quickly identify and remove unused measures and columns. This kept models optimized and maintainable and improved report performance for enterprise clients.

To conclude, I encourage you to start building your professional Power BI toolkit today, without any budget constraints. Identify your biggest daily frustration, whether it’s DAX debugging, measure management, or model optimization, and start with the tool that addresses it. Once you see how free tools can transform your workflow, you’ll naturally want to explore the complete toolkit.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.


Functional Cycle of Dynamics 365 Project Operations

Microsoft Dynamics 365 Project Operations (D365 PO) is an end-to-end solution designed for project-based organizations that need to manage the entire project lifecycle, from sales and estimation to delivery, time tracking, costing, and billing. It unifies capabilities from Project Management, Sales, Resource Planning, Time Tracking, and Financials into a single platform.

This article outlines the complete functional cycle of D365 Project Operations, demonstrating how it supports project-based service delivery efficiently.

Full Cycle of Project Operations in D365

1. Lead to Opportunity
The journey begins when a potential customer expresses interest in a service.

2. Quoting & Estimation
Once requirements are understood, a Project Quote is created.

3. Project Contract & Setup
After customer acceptance, a Project Contract is created.

4. Project Planning
Project Managers build out the work breakdown structure (WBS).

5. Resource Management
Once project tasks are defined, resources are assigned.

6. Time & Expense Management
Assigned resources start delivering work and logging effort.

7. Costing & Financial Tracking
Behind the scenes, every time or expense entry is tracked for:

8. Invoicing & Revenue Recognition
Based on approved time, expenses, or milestones:

Integration Capabilities

D365 PO integrates with:

Reporting & Analytics

Out-of-the-box dashboards include:

Dynamics 365 Project Operations enables organizations to manage the full project lifecycle, from opportunity creation to revenue recognition, without fragmentation between systems or teams.

Key takeaways:

For project-based organizations, D365 Project Operations is not just a project management tool; it is an operational backbone for scalable, profitable service delivery.

To conclude, Dynamics 365 Project Operations is most effective when viewed not as a standalone application, but as a connected operating model for project-based organizations. When implemented correctly, it bridges the traditional gaps between sales promises, delivery execution, and financial outcomes, turning projects into predictable, scalable business assets.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.


What Are Databricks Clusters? A Simple Guide for Beginners

A Databricks Cluster is a group of virtual machines (VMs) in the cloud that work together to process data using Apache Spark. It provides the memory, CPU, and compute power required to run your code efficiently.

Clusters are used for:

Each cluster has two main parts: a driver node and the executors (worker nodes).

Types of Clusters

Databricks supports multiple cluster types, depending on how you want to work.

| Cluster Type | Use Case |
| --- | --- |
| Interactive (All-Purpose) Clusters | Used for notebooks, ad-hoc queries, and development. Multiple users can attach their notebooks. |
| Job Clusters | Created automatically for scheduled jobs or production pipelines. Deleted after job completion. |
| Single Node Clusters | Used for small data exploration or lightweight development. No executors, only one driver node. |

How Databricks Clusters Work

When you execute a notebook cell, Databricks sends your code to the cluster. The cluster’s driver node divides your task into smaller jobs and distributes them to the executors. The executors process the data in parallel and send the results back to the driver. This distributed processing is what makes Databricks fast and scalable for handling massive datasets.

Step-by-Step: Creating Your First Cluster

Let’s create a cluster in your Databricks workspace.

Step 1: Navigate to Compute
In the Databricks sidebar, click Compute. You’ll see a list of existing clusters or an option to create a new one.

Step 2: Create a New Cluster
Click Create Compute in the top-right corner.

Step 3: Configure Basic Settings

Step 4: Select Node Type
Choose the VM type based on your workload. For development, Standard_DS3_v2 or Standard_D4ds_v5 are cost-effective.

Step 5: Auto-Termination
Set the cluster to terminate after 10 or 20 minutes of inactivity. This prevents unnecessary cost when the cluster is idle.

Step 6: Review and Create
Click Create Compute. After a few minutes, your cluster will turn green, indicating it is ready to run code.
Clusters in Unity Catalog-Enabled Workspaces

If Unity Catalog is enabled in your workspace, there are a few additional configurations to note.

| Feature | Standard Workspace | Unity Catalog Workspace |
| --- | --- | --- |
| Access Mode | Default is Single User. | Must choose Shared, Single User, or No Isolation Shared. |
| Data Access | Managed by workspace permissions. | Controlled through Catalog, Schema, and Table permissions. |
| Data Hierarchy | Database → Table | Catalog → Schema → Table |
| Example Query | `SELECT * FROM sales.customers;` | `SELECT * FROM main.sales.customers;` |

When you create a cluster with Unity Catalog, you will see a new Access Mode field on the configuration page. Choose “Shared” if multiple users need to access governed data under Unity Catalog.

Managing Cluster Performance and Cost

Clusters can become expensive if not managed properly. Follow these tips to optimize performance and cost:

a. Use Auto-Termination to shut down idle clusters automatically.
b. Choose the right VM size for your workload. Avoid oversizing.
c. Use Job Clusters for production pipelines since they start and stop automatically.
d. Leverage Autoscaling so Databricks can adjust the number of workers dynamically.
e. Monitor with Ganglia metrics to identify performance bottlenecks.

Common Cluster Issues and Fixes

| Issue | Cause | Fix |
| --- | --- | --- |
| Cluster stuck starting | VM quota exceeded or region issue | Change VM size or region. |
| Slow performance | Too few workers or data skew | Increase worker count or repartition data. |
| Access denied to data | Missing storage credentials | Use Databricks Secrets or Unity Catalog permissions. |
| High cost | Idle clusters running | Enable auto-termination. |

Best Practices for Using Databricks Clusters

1. Always attach your notebook to the correct cluster before running it.
2. Use development, staging, and production clusters separately.
3. Keep the cluster runtime version consistent across environments.
4. Terminate unused clusters to reduce cost.
5. If you use Unity Catalog, prefer Shared clusters for collaboration.
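The settings walked through above (node type, auto-termination, autoscaling) can also be written down as a cluster definition instead of being clicked through in the UI. The sketch below only builds and prints such a definition as a Python dict; it does not call Databricks. The field names follow the shape of a Databricks cluster-create request as far as I know it, while the cluster name, runtime version string, and worker counts are placeholder assumptions.

```python
import json


def build_cluster_spec(name, node_type_id, spark_version,
                       min_workers=1, max_workers=4,
                       autotermination_minutes=20):
    """Return a dict shaped like a cluster-create request body.

    Nothing is sent anywhere; this only assembles and sanity-checks the values.
    """
    if autotermination_minutes <= 0:
        raise ValueError("autotermination_minutes must be positive")
    if not 0 < min_workers <= max_workers:
        raise ValueError("need 0 < min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Autoscaling lets the platform pick a worker count within this range.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Idle clusters shut down after this many minutes.
        "autotermination_minutes": autotermination_minutes,
    }


spec = build_cluster_spec(
    name="dev-cluster",                # hypothetical name
    node_type_id="Standard_DS3_v2",    # VM size suggested for development
    spark_version="15.4.x-scala2.12",  # placeholder; use a runtime your workspace offers
)
print(json.dumps(spec, indent=2))
```

Keeping the definition in code makes it easier to hold runtime versions and auto-termination settings consistent across development, staging, and production.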
To conclude, clusters are the heart of Databricks. They provide the compute power needed to process large-scale data efficiently. Without them, Databricks Notebooks and Jobs cannot run. Once you understand how clusters work, you will find it easier to manage costs, optimize performance, and build reliable data pipelines.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.


Time Travel in Databricks: A Complete, Simple & Practical Guide

Databricks Time Travel is a powerful feature of Delta Lake that lets you access older versions of your data. Whether you want to debug issues, recover deleted records, compare historical performance, or audit how data changed over time, Time Travel makes it straightforward. It’s like a rewind button for your tables, reducing the fear of accidental updates or deletes.

What is Time Travel?

Time Travel enables you to query previous snapshots of a Delta table using either `VERSION AS OF` or `TIMESTAMP AS OF`. Delta automatically versions every transaction (UPDATE, MERGE, DELETE, INSERT), so within the retention window you can go back to an earlier state without restoring backups manually. This versioning is stored in the Delta log, making rewind operations efficient and reliable.

Why Time Travel Matters (Use Cases)

– Debugging Pipelines: Quickly check what the data looked like before a bad job ran.
– Accidental Deletes: Recover records or entire tables.
– Audit & Compliance: Easily demonstrate how data has evolved.
– Root Cause Analysis: Compare two versions side by side.
– Model Re-training: Use historical datasets to retrain ML models.
– Data Quality Tracking: Validate when incorrect data first appeared.

How Delta Stores Versions (Architecture Overview)

Delta Lake stores metadata and version history inside the `_delta_log` folder. Each commit writes a new JSON file, and Delta periodically writes a Parquet checkpoint that summarizes the table state. When you run a query using Time Travel, Databricks does not rebuild the entire table. Instead, it reads the snapshot directly, based on the transaction log. This architecture keeps Time Travel fast and scalable, even on very large datasets.

Time Travel Commands

Query older data:

– `SELECT * FROM table VERSION AS OF 5;`
– `SELECT * FROM table TIMESTAMP AS OF '2024-11-20T10:00:00';`

A. Example: DESCRIBE HISTORY
Run `DESCRIBE HISTORY` on a Delta table to list its versions along with the timestamp and operation of each commit.

B. Querying a Specific Version
Fetch an older snapshot using `VERSION AS OF`.

C. Restoring a Table
You can restore a Delta table to any older version that is still retained using `RESTORE TABLE`.

Retention Rules

Delta keeps older versions based on two configs:

– `delta.logRetentionDuration` → How long commit logs are stored.
– `delta.deletedFileRetentionDuration` → How long old data files are retained.

By default, the commit log keeps 30 days of history, while data files that are no longer referenced become eligible for removal by `VACUUM` after 7 days. You can increase both settings if your compliance policy requires longer retention.

Best Practices

– Use Time Travel for debugging pipeline issues.
– Increase retention for sensitive or audited datasets.
– Use `DESCRIBE HISTORY` frequently during development.
– Avoid unnecessarily large retention windows, since they increase storage costs.
– Use `RESTORE` carefully in production environments.

To conclude, Time Travel in Databricks brings reliability, auditability, and simplicity to modern data engineering. It protects teams from accidental data loss and gives full visibility into how datasets evolve. With just a few commands, you can analyze, compare, or restore historical data, making it one of the most useful features of Delta Lake.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.
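The Time Travel commands covered in this post can be collected into a few small helpers. This is a minimal sketch, assuming you would pass the resulting strings to `spark.sql(...)` in a Databricks notebook; the helpers themselves only build SQL text, so they run anywhere. The table name `main.sales.customers`, the version number, and the 60-day retention value are illustrative assumptions.

```python
import re
from datetime import datetime

# Accepts table, schema.table, or catalog.schema.table with simple identifiers.
_TABLE_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*){0,2}")


def _check_table(table):
    if not _TABLE_RE.fullmatch(table):
        raise ValueError(f"unexpected table name: {table!r}")
    return table


def describe_history(table):
    return f"DESCRIBE HISTORY {_check_table(table)}"


def select_version(table, version):
    return f"SELECT * FROM {_check_table(table)} VERSION AS OF {int(version)}"


def select_timestamp(table, timestamp):
    datetime.fromisoformat(timestamp)  # raises ValueError on a malformed timestamp
    return f"SELECT * FROM {_check_table(table)} TIMESTAMP AS OF '{timestamp}'"


def restore_version(table, version):
    return f"RESTORE TABLE {_check_table(table)} TO VERSION AS OF {int(version)}"


def set_retention(table, days):
    # Sets both retention properties discussed under Retention Rules.
    return (
        f"ALTER TABLE {_check_table(table)} SET TBLPROPERTIES ("
        f"'delta.logRetentionDuration' = 'interval {int(days)} days', "
        f"'delta.deletedFileRetentionDuration' = 'interval {int(days)} days')"
    )


table = "main.sales.customers"  # hypothetical table
for statement in (
    describe_history(table),
    select_version(table, 5),
    select_timestamp(table, "2024-11-20T10:00:00"),
    restore_version(table, 5),
    set_retention(table, 60),
):
    print(statement)  # in a notebook: spark.sql(statement)
```

A typical sequence is to run the `DESCRIBE HISTORY` statement first, pick the version number from before the bad write, inspect it with `VERSION AS OF`, and only then run `RESTORE TABLE`.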


Bank & Payment Reconciliation in Microsoft Dynamics 365 Business Central

In any organization, reconciling bank and payment data is critical to maintaining accurate financial records and cash visibility. Microsoft Dynamics 365 Business Central offers robust tools for Bank Reconciliation and Payment Reconciliation Journals, helping businesses match bank statements with ledger entries, identify discrepancies, and streamline financial audits. In this article, I outline how I learned to perform these reconciliations efficiently using Business Central.

Bank Reconciliation ensures that transactions recorded in the bank ledger match those on the actual bank statement.

Steps:

Benefits:

Reconciled statements are stored and can be printed or exported for documentation.

Set Up Payment Reconciliation Journals

The Payment Reconciliation Journal is used to match customer/vendor payments against open invoices or entries. It supports automatic suggestions and match rules for fast processing.

Configuration Steps:

This setup ensures the journal is ready to load incoming payments and suggest matches automatically.

Use the Payment Reconciliation Journal

Once the setup is done, you can use the journal to reconcile incoming payments against customer/vendor invoices.

Daily Workflow:

Features:

Business Value

| Feature | Value |
| --- | --- |
| Speed | Auto-matching reduces reconciliation time |
| Accuracy | Eliminates manual errors and duplicate entries |
| Audit Ready | Clear audit trail for external and internal auditors |
| Cash Flow Clarity | Real-time visibility into paid/unpaid invoices |

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.


Real-Time Integration with Dynamics 365 Finance & Operations Using Azure Event Hub & Logic Apps (F&O as Source System)

Most organizations think of Dynamics 365 Finance & Operations (D365 F&O) only as a system that receives data from other applications. In reality, the most powerful and scalable architecture is one where F&O itself becomes the source of truth and an event producer.

Every financial transaction, inventory update, order confirmation, or invoice posting is a critical business event, and when these events are not shared with other systems in real time, businesses face:

So, the real question is: what if every critical event in D365 F&O could instantly trigger actions in other systems?

The answer lies in an event-driven architecture using Azure Event Hub and Azure Logic Apps, where F&O becomes the producer of events and the rest of the enterprise becomes real-time listeners.

Event-Driven Model with F&O as Source

In this model, whenever a business event occurs inside Dynamics 365 F&O, an event is immediately published to Azure Event Hub. That event is then picked up by Azure Logic Apps and forwarded to downstream systems such as:

In simple terms:

Event occurs in F&O → Event is pushed to Event Hub → Logic App processes → External system is updated

This enables real-time integration across your entire IT ecosystem.

Why Use Azure Event Hub Between F&O and Other Systems?

Azure Event Hub is designed for high-throughput, real-time event ingestion, which makes it a good fit for capturing business transactions from F&O.

Azure Event Hub provides:

This ensures that every change in F&O is captured and made available in real time to any subscribed system.

Technical Architecture

Here is the architecture with F&O as the source:

Role of each layer:

| Component | Responsibility |
| --- | --- |
| D365 F&O | Generates business events |
| Event Hub | Ingests & streams events |
| Logic App | Consumes + transforms events |
| External Systems | Act on the event |

This architecture is:

✔ Decoupled
✔ Scalable
✔ Secure
✔ Real-time
✔ Fault tolerant

How Does D365 F&O Send Events to Event Hub?

Using Business Events

F&O has a built-in Business Events framework, which can be configured to trigger events such as:

These business events can be configured to push data to an Azure Event Hub endpoint. This is the cleanest, lowest-code, and recommended approach.

Logic App as Event Consumer (Real-Time Processing)

The Azure Logic App is connected to Event Hub via the Event Hub trigger:

Once triggered, the Logic App performs:

Example downstream actions:

| F&O Event | Logic App Action |
| --- | --- |
| Invoice Posted | Push to Power BI + Send email |
| Sales Order | Create record in CRM |
| Inventory Change | Update eCommerce stock |
| Vendor Created | Sync with procurement system |

This allows one F&O event to trigger multiple automated actions across platforms in real time.

Real-Time Example: Invoice Posted in F&O

Step-by-step flow:

All of this happens automatically, within seconds.

Key Technical Benefits

Why this Architecture is Important for Technical Leaders

If you are a CTO, architect, or technical lead, this approach helps you:

Instead of systems “asking” for data, they react to real-time business events.

To conclude, by making Dynamics 365 Finance & Operations the event source and combining it with Azure Event Hub and Azure Logic Apps, organizations can create a fully automated, real-time ecosystem.

Your first step:

➡ Identify a critical business event in F&O
➡ Publish it to Azure Event Hub
➡ Use a Logic App to trigger automatic actions

This single change can transform your integration strategy from reactive to proactive.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.
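To illustrate the fan-out idea in this post, where one F&O event triggers several downstream actions, here is a small in-memory sketch of the routing a consumer performs. It does not use any Azure SDK: the event dictionaries, the event type names, and the handler functions are hypothetical stand-ins for what Event Hub would deliver and what a Logic App would call.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]

# Records what each handler did, standing in for real side effects.
actions_taken: List[str] = []


def push_to_power_bi(event: Event) -> None:
    actions_taken.append(f"power_bi:{event['id']}")


def send_email(event: Event) -> None:
    actions_taken.append(f"email:{event['id']}")


def create_crm_record(event: Event) -> None:
    actions_taken.append(f"crm:{event['id']}")


def update_ecommerce_stock(event: Event) -> None:
    actions_taken.append(f"ecommerce:{event['id']}")


def sync_procurement(event: Event) -> None:
    actions_taken.append(f"procurement:{event['id']}")


# Mirrors the "F&O Event -> Logic App Action" mapping from the post.
ROUTES: Dict[str, List[Callable[[Event], None]]] = {
    "InvoicePosted": [push_to_power_bi, send_email],
    "SalesOrder": [create_crm_record],
    "InventoryChange": [update_ecommerce_stock],
    "VendorCreated": [sync_procurement],
}


def handle_event(event: Event) -> int:
    """Run every handler registered for the event type; return how many ran."""
    handlers = ROUTES.get(event["type"], [])
    for handler in handlers:
        handler(event)
    return len(handlers)


ran = handle_event({"type": "InvoicePosted", "id": "INV-001"})
print(ran, actions_taken)
```

The producer never needs to know who is listening: adding a new downstream system means adding a handler to the routing table, not changing F&O.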


From Legacy Middleware Debt to AI Innovation: Rebuilding the Digital Backbone of a 150-Year-Old Manufacturer

Summary

A global manufacturing client was facing rising middleware costs, poor visibility, and growing pressure to support analytics and AI initiatives. A forced three-year middleware commitment became the trigger to rethink their integration strategy. This article shares how the client moved away from legacy middleware, reduced integration costs by nearly 95%, improved operational visibility, and built a strong data foundation for the future.

Table of Contents

1. The Middleware Cost Problem
2. Building a New Integration Setup
3. Making Integrations Visible
4. Preparing Data for AI
5. How We Did It
6. Savings Metrics

The Middleware Cost Problem

The client was running critical integrations on a legacy middleware platform that had gradually become a financial and operational burden. Licensing costs increased sharply, with annual fees rising from $20,000 to $50,000 and a mandatory three-year commitment pushing the total to $160,000.

Despite the cost, visibility remained limited. Integrations behaved like black boxes, failures were difficult to trace, and teams relied on manual intervention to diagnose and fix issues.

At the same time, the business was pushing toward better reporting, analytics, and AI-driven insights. These initiatives required clean and reliable data flows that the existing middleware could not provide efficiently.

Building a New Integration Setup

Legacy middleware and Scribe-based integrations were replaced with Azure Logic Apps and Azure Functions. The new setup was designed to support global operations across multiple legal entities. Separate DataAreaIDs were maintained for regions including TOUS, TOUK, TOIN, and TOCN. Branching logic handled country-specific account number mappings such as cf_accountnumberus and cf_accountnumberuk.

An agentless architecture was adopted using Azure Blob Storage with Logic Apps. This removed firewall and SQL connectivity challenges and eliminated reliance on unsupported personal-mode gateways.

Making Integrations Visible

The previous setup offered no centralized monitoring, making it difficult to detect failures early. A Power BI dashboard built on Azure Log Analytics provided a clear view of integration health and execution status. Automated alerts were configured to notify teams within one hour of failures, allowing issues to be addressed before they impacted critical business processes.

Preparing Data for AI

With stable integrations in place, the focus shifted from cost savings to long-term readiness. Clean data flows became the foundation for platforms such as Databricks and governance layers like Unity Catalog. The architecture supports conversational AI use cases, enabling questions like “Is raw material available for this production order?” to be answered from a unified data foundation. As a first step, 32 reports were consolidated into a single catalog to validate data quality and integration reliability.

How We Did It

1. Retrieve config.json and checkpoint.txt from Azure Blob Storage for configuration and state control.
2. Run incremental HTTP GET queries using `ModifiedDateTime1 gt [CheckpointTimestamp]`.
3. Check for existing records using OData queries in target systems with keys such as ScribeCRMKey.
4. Transform data using Azure Functions with region-specific Liquid templates.
5. Write data securely using PATCH or POST operations with OAuth 2.0 authentication.
6. Update checkpoint timestamps in Azure Blob Storage after successful execution.
7. Log step-level success or failure using a centralized Logging Logic App (TO-UAT-Logs).

Savings Metrics

– 95% reduction in annual integration costs, from $50,000 to approximately $2,555.
– Approximately $140,000 in annual savings.
– Integrations across D365 Field Service, D365 Sales, D365 Finance & Operations, Shopify, and SQL Server.
– Designed to support modernization of more than 600 fragmented reports.

FAQs

Q: How does this impact Shopify integrations?
A: Azure Integration Services acts as the middle layer, enabling Shopify orders to synchronize into Finance & Operations and CRM systems in real time.

Q: Is the system secure for global entities?
A: Yes. The solution uses Azure AD OAuth 2.0 and centralized key management for all API calls.

Q: Can it handle attachments?
A: Dedicated Logic Apps were designed to synchronize CRM annotations and attachments to SQL servers located behind firewalls using an agentless architecture.

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.
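The checkpoint-driven flow described under “How We Did It” can be sketched in a few lines. This is an illustration only, not the client’s Logic App: the source and target are plain in-memory structures instead of HTTP and OData calls, the record shape (`key`, `modified`, `data`) is an assumption, and timestamps are ISO 8601 UTC strings so that string comparison matches time order.

```python
from typing import Dict, List, Tuple


def build_incremental_filter(checkpoint: str) -> str:
    """OData filter for records changed after the stored checkpoint."""
    return f"ModifiedDateTime1 gt {checkpoint}"


def choose_write_method(key: str, target: Dict[str, dict]) -> str:
    """PATCH when the key already exists in the target, otherwise POST."""
    return "PATCH" if key in target else "POST"


def run_sync(source: List[dict], target: Dict[str, dict],
             checkpoint: str) -> Tuple[str, List[Tuple[str, str]]]:
    """Apply records newer than the checkpoint; return the new checkpoint and a log."""
    new_checkpoint = checkpoint
    log: List[Tuple[str, str]] = []
    for record in sorted(source, key=lambda r: r["modified"]):
        if record["modified"] <= checkpoint:
            continue  # already processed in an earlier run
        method = choose_write_method(record["key"], target)
        target[record["key"]] = record["data"]  # stand-in for the HTTP write
        log.append((method, record["key"]))
        # Advance the checkpoint only after the write succeeded.
        new_checkpoint = max(new_checkpoint, record["modified"])
    return new_checkpoint, log


source_records = [
    {"key": "A", "modified": "2024-05-01T10:00:00Z", "data": {"name": "Acme"}},
    {"key": "B", "modified": "2024-05-03T09:30:00Z", "data": {"name": "Birch"}},
    {"key": "C", "modified": "2024-04-30T08:00:00Z", "data": {"name": "Cedar"}},
]
target_system = {"A": {"name": "Acme (old)"}}  # keyed the way ScribeCRMKey would be

print(build_incremental_filter("2024-05-01T00:00:00Z"))
checkpoint, sync_log = run_sync(source_records, target_system, "2024-05-01T00:00:00Z")
print(checkpoint, sync_log)
```

Storing the checkpoint outside the workflow (here a return value, in the article a blob) is what lets each run pick up where the previous one stopped without re-reading the whole source.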


Bridging Project Execution and Finance: How PO F&O Connector Unlocks Full Value in Dynamics 365

In a world where timing, accuracy, and coordination make or break profitability, modern project-based enterprises demand more than isolated systems. You may be using Dynamics 365 Project Operations (ProjOps) to manage projects, timesheets, and resource planning, and Dynamics 365 Finance & Operations (F&O) for financials, billing, and accounting. But without seamless integration, you’re stuck with manual transfers, data silos, and delayed insights.

That’s where the PO F&O Connector app comes in. It is built to synchronize Project Operations and F&O end to end, bringing delivery and finance into alignment. In this article, we’ll explore how it works, why it matters to CEOs, CFOs, and CTOs, and how adopting it gives you a competitive edge.

The Pain Point: Disconnected Project & Finance Workflows

When your project execution and financial systems aren’t talking:

The result? Missed revenue, resource inefficiencies, and poor visibility into project financial health.

The Solution: CloudFronts Project-to-Finance Integration App

CloudFronts’ new app is purpose-built to connect Project Operations → Finance & Operations, automating the flow of project data into financial systems and enabling real-time, consistent delivery-to-finance synchronization.

Key capabilities include:

| Role | Core Benefits | Outcomes |
| --- | --- | --- |
| CEO | Visibility into project margins and outcomes; faster time to value | Better strategic decisions, competitive agility |
| CFO | Automates billing, enforces accounting rules, ensures audit compliance | Revenue gets recognized faster, finance becomes a strategic enabler |
| CTO | Reduces custom integration burdens, ensures system integrity | Lower maintenance costs, scalable architecture |

Beyond roles, your entire organization benefits through:

We hope you found this blog useful, and if you would like to discuss anything, you can reach out to us at transform@cloudfronts.com.