How to capture the detail level logs when Azure Data Factory Pipeline fails or success

In this blog I am going to explain you how to store detail level logs when Azure Data Factory Pipeline fails or success.

First you need to create ADF_LogsRecording table using following SQL script.

CREATE TABLE [dbo].[ADF_LogsRecording](

[LogsRecordingID] [int] IDENTITY(1,1) NOT NULL,

[DataFactoryName] [nvarchar](200) NULL,

[PipelineName] [nvarchar](200) NULL,

[PipelineId] [nvarchar](200) NULL,

[PipelineStartTime] [datetime] NULL,

[ErrorCode] [nvarchar](1000) NULL,

[ErrorDescription] [nvarchar](max) NULL,

[ErrorLogTime] [datetime] NULL,

[ActivityID] [nvarchar](100) NULL,

[ActivityName] [nvarchar](200) NULL,

[ActivityStartTime] [datetime] NULL,

[ActivityEndTime] [datetime] NULL,

[ActivityDuration] [time](7) NULL,

[ActivityStatus] [nvarchar](100) NULL,

[Itemcode] [nvarchar](50) NULL,

[FrgnName] [nvarchar](100) NULL,

[U_COR_BU_TXTS] [nvarchar](max) NULL,

[U_COR_BU_TXTQ] [nvarchar](max) NULL,

[CreatedOn] [datetime] NULL,

CONSTRAINT [PK_ADF_LogsRecording] PRIMARY KEY CLUSTERED

(

[LogsRecordingID] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

GO

ALTER TABLE [dbo].[ADF_LogsRecording] ADD CONSTRAINT [DF_CreatedOn] DEFAULT (getdate()) FOR [CreatedOn]

GO

Also create a store procedure suppose you are going to use in ADF Pipeline.

CREATE PROCEDURE [dbo].[sp_ADF_LogsRecording_Insert]

(

@DataFactoryName nvarchar(200),

@PipelineName nvarchar(200),

@PipelineId nvarchar(200),

@PipelineStartTime datetime,

@ErrorCode nvarchar(1000),

@ErrorDescription nvarchar(max),

@ErrorLogTime datetime,

@ActivityID nvarchar(100),

@ActivityName nvarchar(200),

@ActivityStartTime datetime,

@ActivityEndTime datetime,

@ActivityDuration time,

@ActivityStatus nvarchar(200),

@Itemcode nvarchar(100),

@FrgnName nvarchar(200),

@U_COR_BU_TXTS nvarchar(max),

@U_COR_BU_TXTQ nvarchar(max)

)

AS

BEGIN

INSERT INTO ADF_LogsRecording

(

DataFactoryName,

PipelineName,

PipelineId,

PipelineStartTime,

ErrorCode,

ErrorDescription,

ErrorLogTime,

ActivityID,

ActivityName,

ActivityStartTime,

ActivityEndTime,

ActivityDuration,

ActivityStatus,

Itemcode,

FrgnName,

U_COR_BU_TXTS,

U_COR_BU_TXTQ

)

VALUES

(

@DataFactoryName,

@PipelineName,

@PipelineId,

@PipelineStartTime,

@ErrorCode,

@ErrorDescription,

@ErrorLogTime,

@ActivityID,

@ActivityName,

@ActivityStartTime,

@ActivityEndTime,

@ActivityDuration,

@ActivityStatus,

@Itemcode,

@FrgnName,

@U_COR_BU_TXTS,

@U_COR_BU_TXTQ

)

END

We are also creating SQL store procedure to get all record suppose you want to either insert or update in destination.

CREATE procedure [dbo].[SP_GETItem]

AS

BEGIN

SELECT itemcode,FrgnName,U_COR_BU_TXTS,U_COR_BU_TXTQ

FROM OITM WITH (NOLOCK)

END

GO

CREATE procedure [dbo].[sp_GetItemByItemCode]

(

@ItemCode nvarchar(200)

)

AS

BEGIN

SELECT ItemCode,FrgnName,U_COR_BU_TXTS,U_COR_BU_TXTQ

FROM OITM where ItemCode=@ItemCode

END

GO

Now create a pipeline

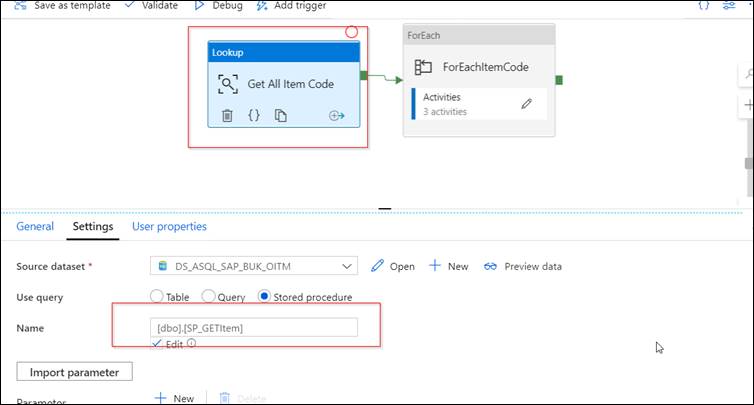

Step 1: Use Lookup activity to get all item.

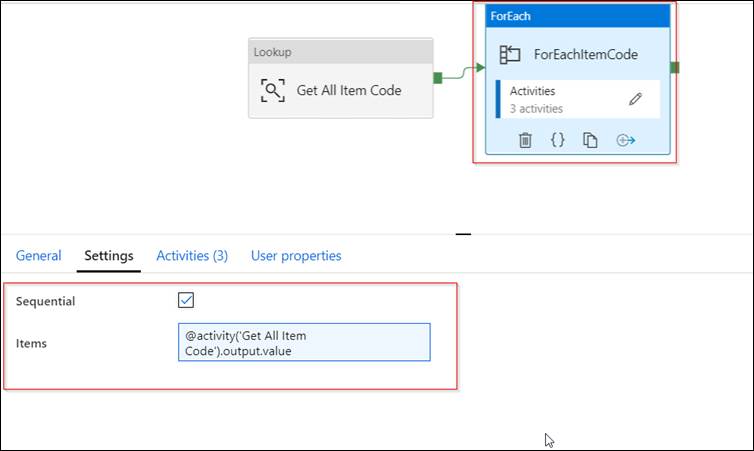

Step 2: ForEach activity should use to loop each itemcode wise, configure the settings of foreach activity

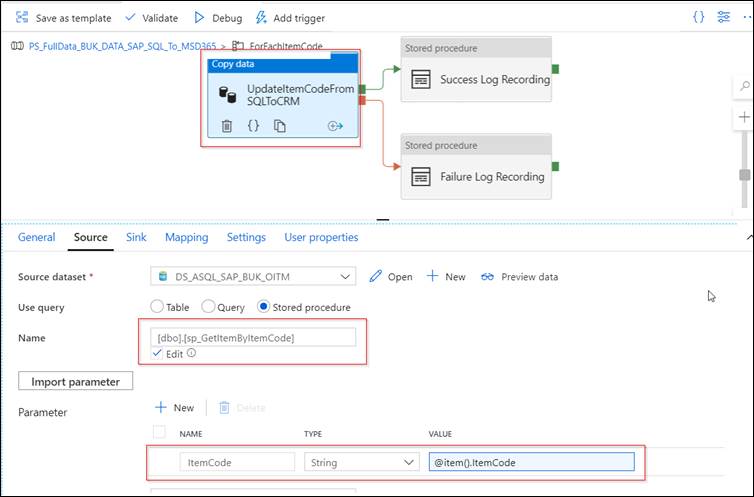

Step 3: Add a Copy activity inside ForEach activity and set source properties.

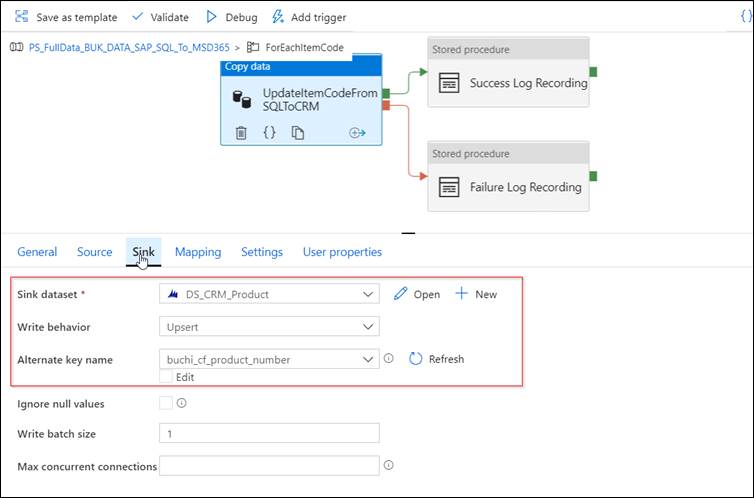

Set Sink properties as per below screenshot.

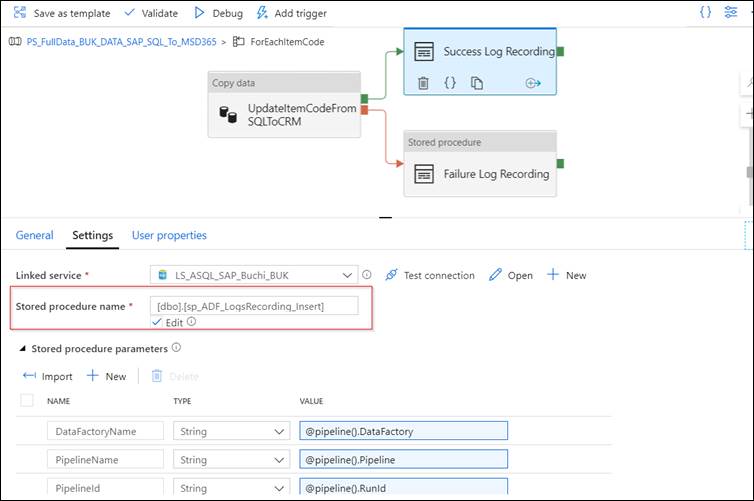

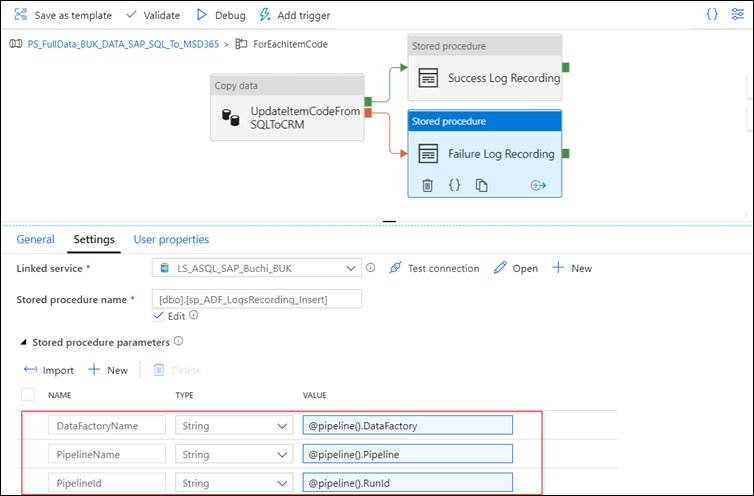

Step 4: Add Store procedure activity for both Success and Failure of Copy activity.

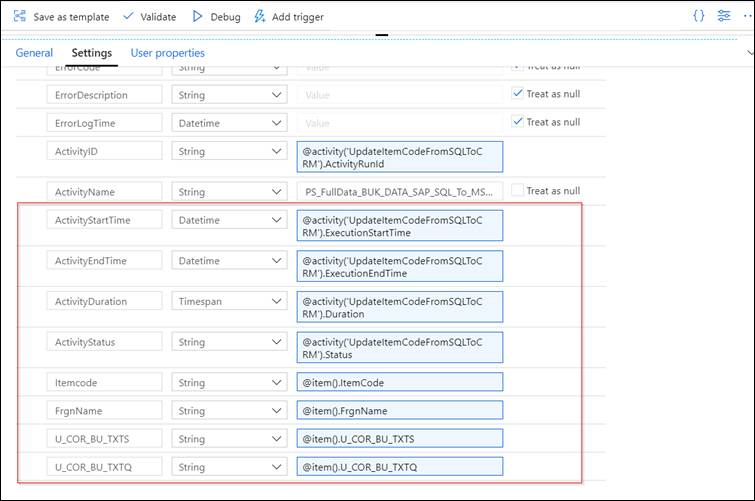

Step 5: Add store procedure activity for success and configure properties as per below screenshot.

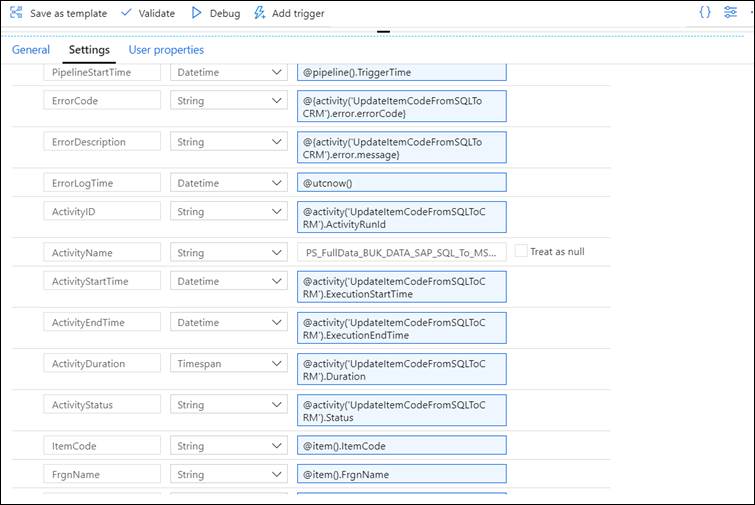

Step 6: Add store procedure activity for failure and configure properties as per below screenshot.

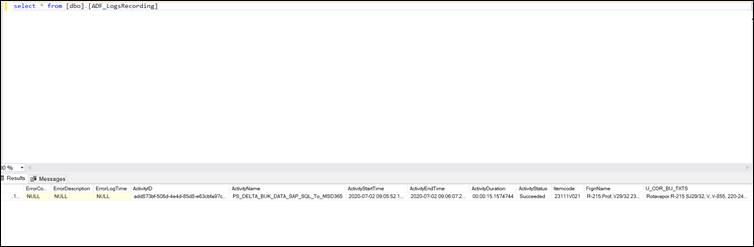

Now when we run the pipeline, based on failure or success of pipeline record level logs were stored in table.

I hope this will help you.